# Guiji DUIX H5 SDK Documentation

# Installation

```bash
npm i duix-guiji-light -S
```

# Quick Start

```javascript
import DUIX from 'duix-guiji-light'

const duix = new DUIX()

// intialSucccess fires once all resources are ready
duix.on('intialSucccess', () => {
  // Start session
  duix.start({
    openAsr: true
  }).then(res => {
    console.info(res)
  })
})

// Initialize
duix.init({
  conversationId: '',
  sign: 'xxxx',
  containerLable: '.remote-container'
})
```

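Note: Do not store the `duix` instance in Vue's `data` or React's `state`; it does not need to be reactive. The event name `intialSucccess` and the option `containerLable` are spelled exactly as used throughout this documentation; keep that spelling in your code.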

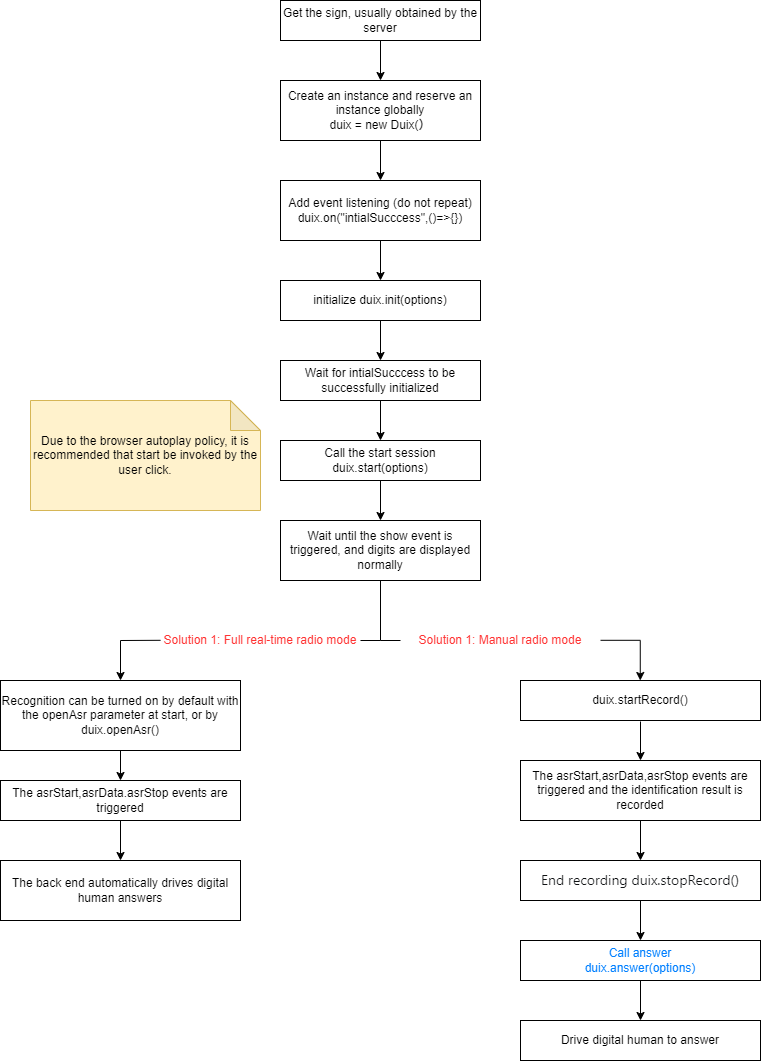

# Call Flow
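The call flow described in this document (listen for `intialSucccess`, call `init`, call `start` once resources are ready, then call `openAsr` after `show`) can be sketched as one async function. This is a minimal sketch, not part of the SDK: `waitForEvent` and `runSession` are hypothetical helpers, and starting with `muted: true` follows the autoplay advice under `start`. The function takes an existing `duix` instance so it can be tried against a stub.

```javascript
// Hypothetical helper (not part of the SDK): resolves the first time
// `eventName` fires. Only `on` is documented, so the listener stays
// registered; the `settled` flag turns later firings into no-ops.
function waitForEvent(duix, eventName) {
  return new Promise(resolve => {
    let settled = false;
    duix.on(eventName, payload => {
      if (settled) return;
      settled = true;
      resolve(payload);
    });
  });
}

// Hypothetical wrapper that follows the documented order of calls.
async function runSession(duix, { sign, conversationId, containerLable }) {
  // Register listeners before init so neither event can be missed.
  const ready = waitForEvent(duix, 'intialSucccess');
  const shown = waitForEvent(duix, 'show');

  duix.init({ sign, conversationId, containerLable });
  await ready; // all resources are ready

  // start resolves with { err, data }; a non-empty err means failure.
  const { err } = await duix.start({ muted: true });
  if (err) throw new Error(`start failed: ${JSON.stringify(err)}`);

  // openAsr should be called once the digital human is shown.
  await shown;
  return duix.openAsr();
}
```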

# Methods

# init(option: object): Promise

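Initializes the SDK. The `intialSucccess` event fires when all resources are ready.

```javascript
duix.init({
  sign: '',
  containerLable: '.remote-container',
  conversationId: '',
})
```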

Parameters

| Name | Type | Required | Description |
|---|---|---|---|
| containerLable | string | Yes | Selector of the digital human container. The digital human is rendered in this DOM element. |
| sign | string | Yes | Authentication string. See the platform documentation for how to obtain it. |
| conversationId | number | Yes | Platform session ID |

# start(option:object): Promise

Calling `start` begins rendering the digital human and starts the interaction.

Note: Call this method only after the `intialSucccess` event has fired; that event indicates that all resources are ready. For example:

```javascript
duix.on('intialSucccess', () => {
  duix.start({
    muted: true,
    wipeGreen: false,
  }).then(res => {
    console.log('res', res)
  })
})
```

Parameters

| Key | Type | Required | Default | Description |
|---|---|---|---|---|
| muted | boolean | No | false | Whether to start the digital human video muted. Important: because of browser autoplay policy restrictions, if the page has not yet received any user interaction, set this to `true`; otherwise the digital human will not load. In that case, start muted and add an interaction to your product, such as a "Start" button that calls `duix.setVideoMuted(false)`. |
| openAsr | boolean | No | false | Whether to enable real-time speech recognition immediately. Setting this to `true` is equivalent to calling the `openAsr` method right after `start`. |
| wipeGreen | boolean | No | false | Whether to remove the green screen from the video. Requires a pure green background image to be uploaded when creating the session on the platform. |
| userId | number | No | | Unique user identifier |

# setVideoMuted(flag:boolean)

Sets whether the digital human video is muted: `true` mutes it, `false` unmutes it.
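The muted-start pattern recommended under `start` can be sketched as a small helper. `unmuteOnFirstClick` is hypothetical, not part of the SDK; it assumes `start` was already called with `muted: true` and that `target` is a DOM element such as a "Start" button (anything with `addEventListener` works).

```javascript
// Hypothetical helper (not part of the SDK): unmutes the digital human on
// the first click of `target`, which counts as a user interaction under
// the browser autoplay policy.
function unmuteOnFirstClick(duix, target) {
  target.addEventListener(
    'click',
    () => {
      duix.setVideoMuted(false);
    },
    { once: true } // the browser removes the listener after the first click
  );
}
```

In a page, call `unmuteOnFirstClick(duix, document.querySelector('#start-button'))` after `start` resolves; `#start-button` is a placeholder selector.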

# break()

Interrupts the digital human's current speech.

# speak(option: Object): Promise

Drives the digital human to speak. Both text-driven and audio-file-driven speech are supported.

```javascript
duix.speak({ content: '', audio: 'https://your.website.com/xxx/x.wav' })
```

Parameters

| Name | Type | Required | Description |
|---|---|---|---|
| content | string | Yes | Text for the digital human to speak |
| audio | string | No | Audio URL of the digital human's response; can be obtained through `getAnswer` |
| interrupt | boolean | No | Whether to interrupt the previous speech |

# answer(option: Object): Promise

Has the digital human answer a question.

```javascript
duix.answer({ question: 'xxx' })
```

Parameters

| Name | Type | Required | Description |
|---|---|---|---|
| question | string | Yes | Question text |
| interrupt | boolean | No | Whether to interrupt the previous speech |

# getAnswer(option: Object): Promise

Gets the answer to a question from the platform.

```javascript
duix.getAnswer({ question: 'How is the weather today?' })
```

Parameters

| Name | Type | Required | Description |
|---|---|---|---|
| question | string | Yes | Question text |
| userId | number | No | Business-side unique user ID. When specified, memory is enabled for answers. |

Return data

| Name | Type | Description |
|---|---|---|
| answer | string | Text response from the digital human |
| audio | string | Audio URL of the digital human's response |
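Because `speak` accepts the `audio` URL returned by `getAnswer`, the two calls can be chained. The sketch below is hypothetical (`askAndSpeak` is not part of the SDK) and assumes the `{ err, data }` format described under "Return Format Description", with `data` holding `answer` and `audio`. Splitting the work this way, rather than calling `answer`, lets the page inspect or log the text before the digital human speaks it.

```javascript
// Hypothetical helper (not part of the SDK): fetch an answer from the
// platform, then drive the digital human with its text and audio URL.
async function askAndSpeak(duix, question, userId) {
  const params = { question };
  if (userId !== undefined) params.userId = userId; // enables answer memory

  const { err, data } = await duix.getAnswer(params);
  if (err) throw new Error(`getAnswer failed: ${JSON.stringify(err)}`);

  // Interrupt whatever the digital human is currently saying.
  await duix.speak({ content: data.answer, audio: data.audio, interrupt: true });
  return data.answer;
}
```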

# startRecord():Promise

Starts recording. Returns the current recording's MediaStream, which can be used to display a wave animation.

# stopRecord():Promise

Stops recording.
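The MediaStream returned by `startRecord` can drive a wave animation through the standard Web Audio API. This is a minimal sketch: `rmsLevel` and `attachLevelMeter` are hypothetical helpers, and using an `AnalyserNode` to compute an RMS level is one common technique, not something the SDK prescribes.

```javascript
// Converts one frame of AnalyserNode byte time-domain data (128 = silence)
// into an RMS level between 0 and 1.
function rmsLevel(samples) {
  if (samples.length === 0) return 0;
  let sum = 0;
  for (const v of samples) {
    const x = (v - 128) / 128; // map 0..255 to roughly -1..1
    sum += x * x;
  }
  return Math.sqrt(sum / samples.length);
}

// Browser-only: samples `stream` every animation frame and passes the level
// to `onLevel` (for example, to scale a wave animation). Call it from a user
// gesture; browsers may otherwise keep the AudioContext suspended.
// Returns a function that stops the meter.
function attachLevelMeter(stream, onLevel) {
  const ctx = new AudioContext();
  const analyser = ctx.createAnalyser();
  analyser.fftSize = 1024;
  ctx.createMediaStreamSource(stream).connect(analyser);
  const buf = new Uint8Array(analyser.fftSize);
  let frame = 0;
  const tick = () => {
    analyser.getByteTimeDomainData(buf);
    onLevel(rmsLevel(buf));
    frame = requestAnimationFrame(tick);
  };
  tick();
  return () => {
    cancelAnimationFrame(frame);
    ctx.close();
  };
}
```

Assuming the stream arrives as `data` in the `{ err, data }` result, usage would look like `const { data: stream } = await duix.startRecord()`, then `const stopMeter = attachLevelMeter(stream, level => { /* redraw */ })`, and finally `stopMeter()` before `duix.stopRecord()`.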

# openAsr():Promise

Enables real-time speech recognition. Call this method after the `show` event has fired. Once real-time speech recognition is enabled, listen to the `asrResult` event to receive the recognized text.

# closeAsr():Promise

Close real-time speech recognition.
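Recognition events can be wired to `answer` so that each finished sentence becomes a question. This is a hypothetical sketch, not part of the SDK, built on the `asrStart`, `asrData`, and `asrStop` events listed in the Events table. Assumption: the payload shape is not documented here, so the sketch assumes each `asrData` payload carries the current sentence text in a `text` field; pass a different `getText` if it does not.

```javascript
// Hypothetical wiring (not part of the SDK). ASSUMPTION: asrData payloads
// expose the sentence text as `payload.text`; override `getText` otherwise.
function answerRecognizedSpeech(duix, getText = payload => payload && payload.text) {
  let latest = '';

  duix.on('asrStart', () => {
    latest = ''; // a new sentence begins
  });

  duix.on('asrData', payload => {
    const text = getText(payload);
    if (text) latest = text; // keep the most recent real-time result
  });

  duix.on('asrStop', () => {
    const question = latest.trim();
    latest = '';
    if (question) {
      // Interrupt the current reply so the new question is answered at once.
      duix.answer({ question, interrupt: true });
    }
  });
}
```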

# stop()

Stops the current session. Call this method in the page's unload handler so that session resources are released promptly when the page is refreshed or closed.

```javascript
window.addEventListener('beforeunload', function (event) {
  if (duix) {
    duix.stop()
  }
})
```

# getLocalStream()

Gets the local audio stream, useful for audio visualization and similar features outside the SDK.

# getRemoteStream()

Gets the remote audio/video stream, useful for custom handling outside the SDK.

# resume()

Resumes playback. This is currently needed only on some mobile browsers where autoplay still fails even when triggered by a user action. When the SDK raises error 4009, call `resume` from a user action (such as a tap) to resolve it.
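The 4009 recovery described above can be sketched as an `error` listener that waits for the next tap. `resumeOnAutoplayBlock` is hypothetical, not part of the SDK; `target` is assumed to be any element with `addEventListener`, such as `document` or a "Tap to play" overlay, and `onBlocked` is an optional hook for showing that overlay.

```javascript
// Hypothetical helper (not part of the SDK): when autoplay is blocked
// (error code 4009), call `resume` from the next user click.
function resumeOnAutoplayBlock(duix, target, onBlocked = () => {}) {
  duix.on('error', err => {
    // The error object's `code` is documented as a string field;
    // String() also covers a numeric code.
    if (!err || String(err.code) !== '4009') return;
    onBlocked(); // e.g. show a "Tap to play" overlay
    target.addEventListener('click', () => duix.resume(), { once: true });
  });
}
```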

# on(eventname, callback)

Listens for an event.

Parameters

| Name | Type | Description |
|---|---|---|
| eventname | string | Event name; see the Events table below for details. |
| callback | function | Callback function |

# Return Format Description

For methods that return a Promise, the resolved value has the format `{ err, data }`. If `err` is not empty, the call failed.
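When using `async`/`await`, it can be convenient to turn the `{ err, data }` result into an ordinary rejection. The `unwrap` helper below is a hypothetical sketch, not part of the SDK; it treats any falsy `err` (`null`, `undefined`, `''`) as success.

```javascript
// Hypothetical helper (not part of the SDK): resolves with `data`, or
// rejects when `err` is not empty.
async function unwrap(resultPromise) {
  const { err, data } = await resultPromise;
  if (err) {
    const error = new Error(typeof err === 'string' ? err : JSON.stringify(err));
    error.cause = err; // keep the original error for logging
    throw error;
  }
  return data;
}
```

For example, `const { answer, audio } = await unwrap(duix.getAnswer({ question: 'How is the weather today?' }))` inside a `try`/`catch`.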

# Events

| Name | Description |
|---|---|
| error | Triggered when an uncaught error occurs. |
| bye | Triggered when the session ends. |
| intialSucccess | Digital human initialization succeeded. You can call the `start` method in this event's callback. |
| show | The digital human is now displayed. |
| progress | Digital human loading progress. |
| speakSection | Current audio and text segment of the digital human's speech. The `answer` method produces streaming segments; if you call `speak` on its own, this event is equivalent to `speakStart`. |
| speakStart | There is a slight delay between driving the digital human to speak and the actual speech; this event indicates that the digital human has actually started speaking. |
| speakEnd | The digital human has finished speaking. |
| asrStart | Speech recognition for a single sentence started. Triggers only once during `startRecord`. |
| asrData | Real-time recognition result for a single sentence. During `startRecord`, the payload also includes a `textList` array containing all recognition results. |
| asrStop | Speech recognition for a single sentence ended. |
| report | Reports RTC network quality, video quality, and other information every second. |

# error

```javascript
{
  code: '',    // Error code
  message: '', // Error message
  data: {}     // Error content
}
```

Error codes

| Code | Description | data |
|---|---|---|
| 3001 | RTC connection failed | |
| 4001 | Failed to start session | |
| 4005 | Authentication failed | |
| 4007 | Server session ended abnormally | `code: 100305`: model file not found |
| 4008 | Failed to get the microphone stream | |
| 4009 | The browser's playback policy blocked autoplay | Consider muted playback, or call the `start` method from a user action |

# progress

The progress value is a number from 0 to 100.
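A loading indicator can follow this value directly. `bindLoadingBar` is a hypothetical sketch, not part of the SDK; `bar` is assumed to be a `<progress>` element (whose `max` defaults to 1, so the helper sets it to 100), and the bar is hidden once `show` fires.

```javascript
// Hypothetical helper (not part of the SDK): mirrors the `progress` event
// into a <progress> element and hides it when the digital human is shown.
function bindLoadingBar(duix, bar) {
  bar.max = 100; // progress values range from 0 to 100

  duix.on('progress', value => {
    // Clamp defensively in case a value falls outside 0-100.
    bar.value = Math.min(100, Math.max(0, Number(value) || 0));
  });

  duix.on('show', () => {
    bar.hidden = true;
  });
}
```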

# speakSection

```javascript
{
  text: '', // Current segment text
  audio: '' // Current segment audio URL
}
```
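Since each `speakSection` payload carries the text of one segment, live captions can be built by appending segments until `speakEnd`. `bindCaptions` is a hypothetical sketch, not part of the SDK. It clears the caption at the first segment after `speakEnd` rather than on `speakStart`, because the relative order of `speakStart` and the first `speakSection` is not specified here. Segments are concatenated as-is.

```javascript
// Hypothetical helper (not part of the SDK): builds captions from
// `speakSection` events and passes the text so far to `onCaption`.
function bindCaptions(duix, onCaption) {
  let caption = '';
  let finished = true; // true until the first segment of a new utterance

  duix.on('speakSection', section => {
    if (finished) {
      caption = ''; // new utterance: drop the previous caption
      finished = false;
    }
    caption += (section && section.text) || '';
    onCaption(caption);
  });

  duix.on('speakEnd', () => {
    finished = true; // keep the last caption visible until new speech starts
  });
}
```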